Let’s get technical (Pt 2): Using Python data science libraries on web map tiles

This is Part 2 in a two-part series around using Vexcel data with Python. Part 1 can be found here.

In Part 1 of this series, steps were shared on how to perform simple operations to extract data from a web map tile service, and how to visualize that data in a Python notebook.

The objective in this article is a bit more ambitious. It’s designed to show how common computer vision algorithms can be simply applied to remote sensing data. As ever with data science, much of the challenge lies in mapping real world data to an algorithm and back again, and this article aims to show a clear method of taking geospatial data into a more abstract reference frame that’s better suited for analysis, and then back to the geospatial domain.

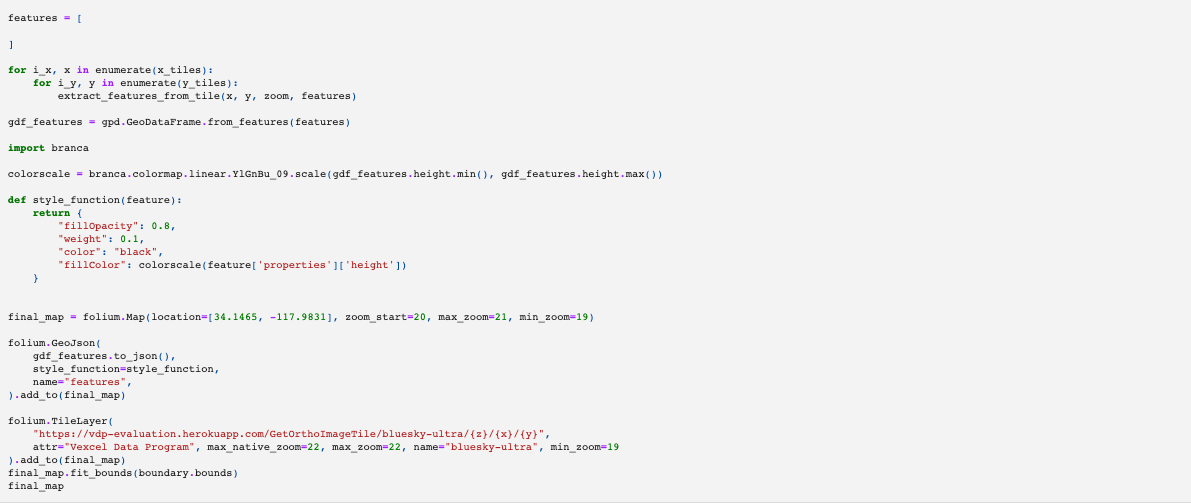

By using only three functions from Scikit Image and Scikit Learn, we can generate features that are highlighted on the map below. The three functions used are:

sklearn.preprocessing: StandardScalersklearn.cluster: DBSCANskimage.measure: find_contoursAll of the other code presented is really focused on transforming geospatial data to the domain of an image and then back again.

The workflow we’ll follow can be summarized as:

- define area of interest (AOI) using the bounding north, west, south and eastern points of a rectangle

- validate that area on a map

- trial the DBSCAN clustering algorithm in a configurations on different types of data for a small portion of the AOI to settle on a clustering method

- apply the method over the whole AOI

- view the results on a map

Start with a bounding box:

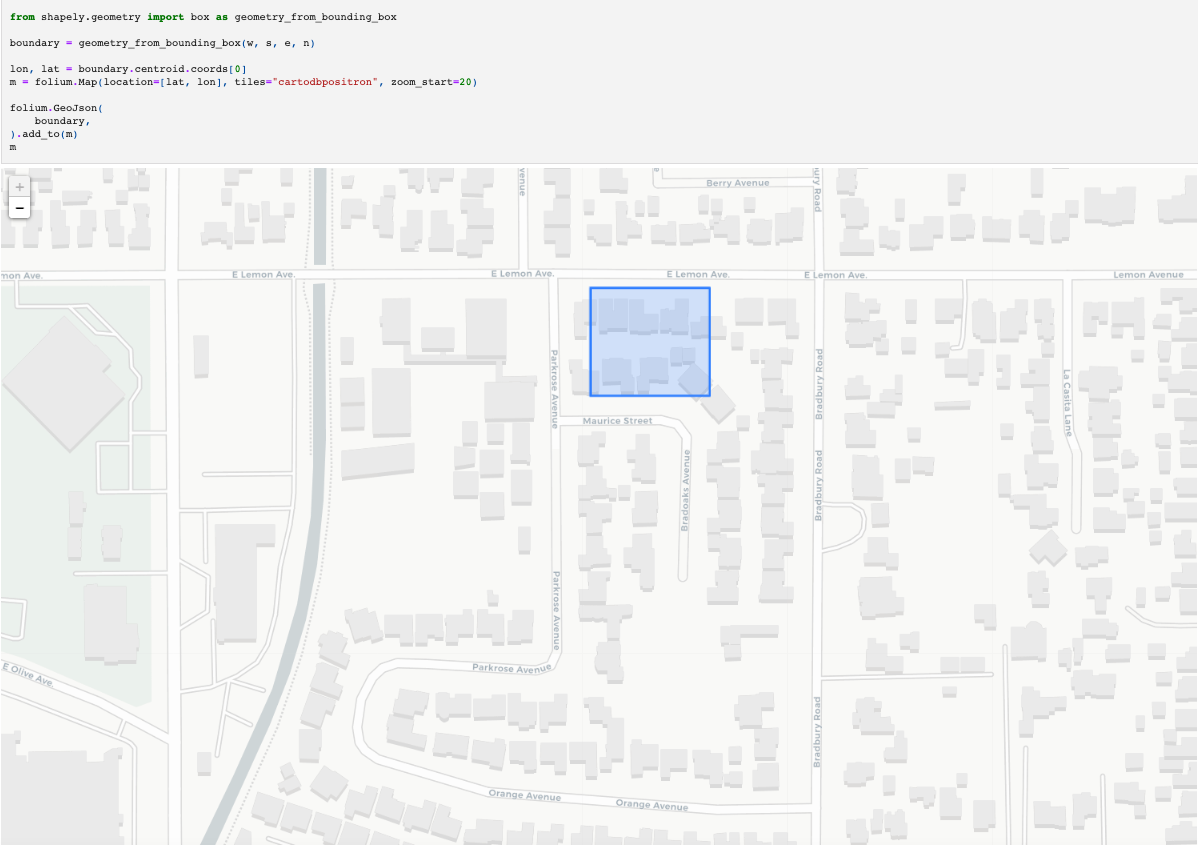

We’ll use Shapely to encode the shape set by those four corners, and then visualize the shape in Folium on the map to validate we’re working in the expected region.

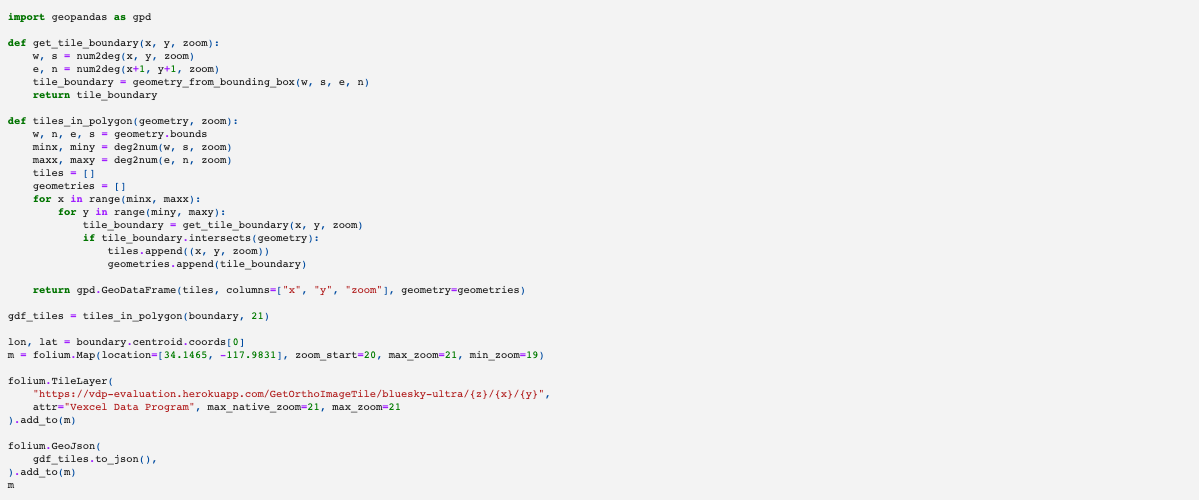

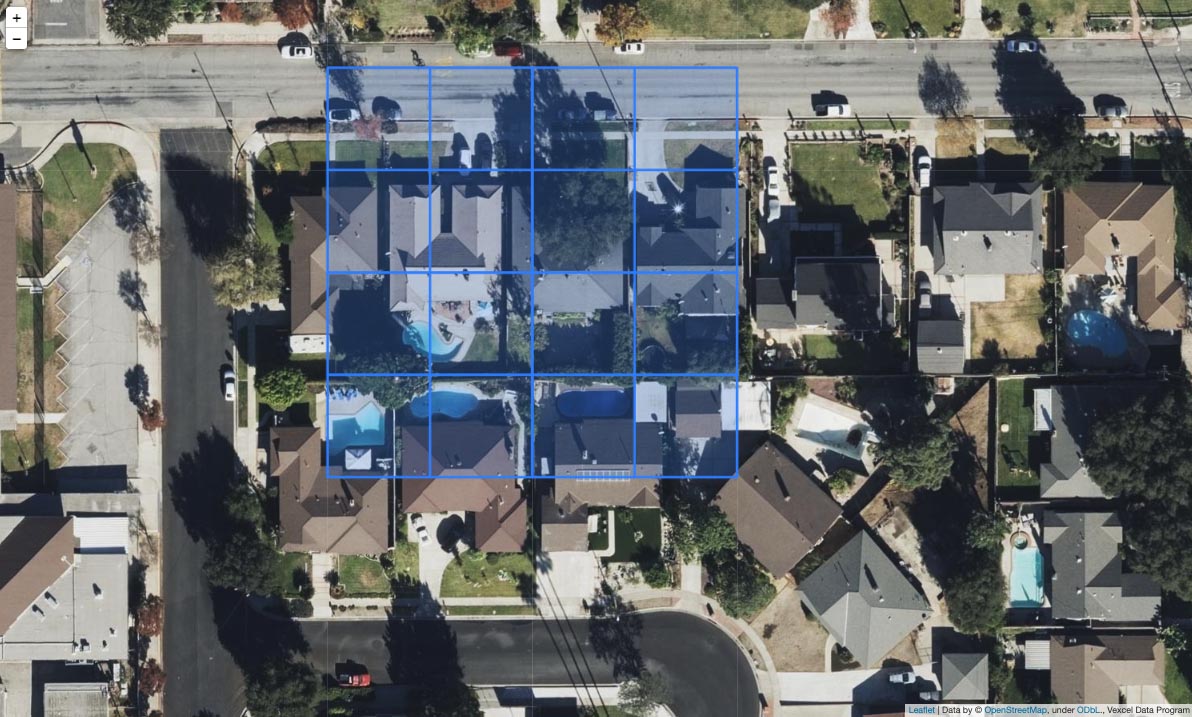

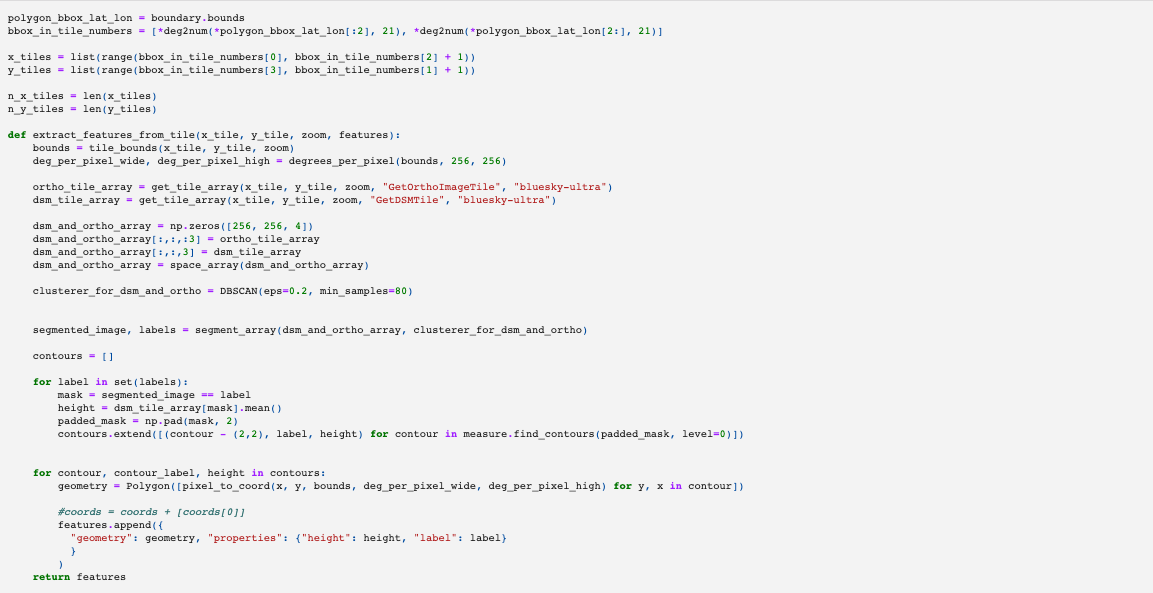

Next, we define some functions to visualize the tiles we’re using. In the previous article, I showed how to go from a latitude and longitude coordinate, to a tile coordinate, and how that’s used to source an image. The two functions below take the boundary geometry we defined above, and generate all of the map tiles that overlap that boundary at zoom level 21. Doing this, we can see on the map that we’re going to be using 16 tiles, and that most of those cover buildings.

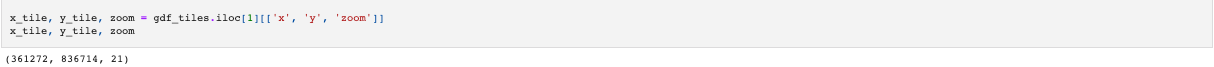

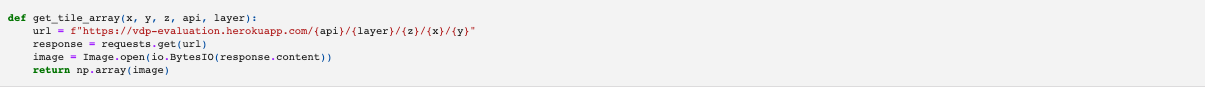

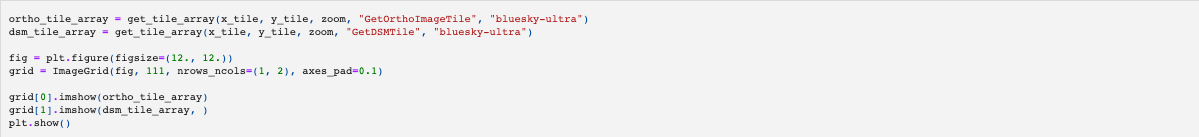

To get started, we’ll just use a single tile and examine the output from applying some machine learning algorithms on that tile.

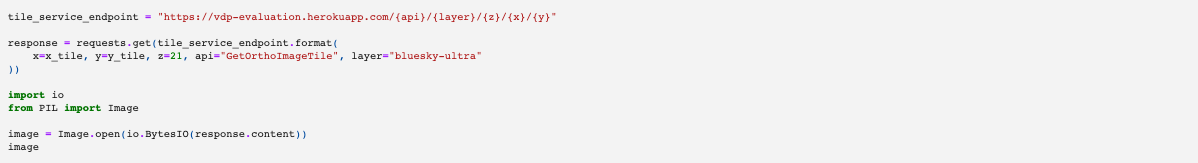

Define the tile service endpoint that we’re using. This article uses the evaluation endpoint, but if you’re an existing Vexcel Data Program customer, you could substitute the production URL. The API endpoint below is a publicly available tile service that only returns tiles within a small area. It will allow anyone to follow along with the workflow below, but won’t return any data outside of these boundaries.

If you’re using another source of spatial data, you could also use that. However note that this endpoint does return a Digital Surface Model which encodes elevation, so you might need to make some changes by removing references to the DSM.

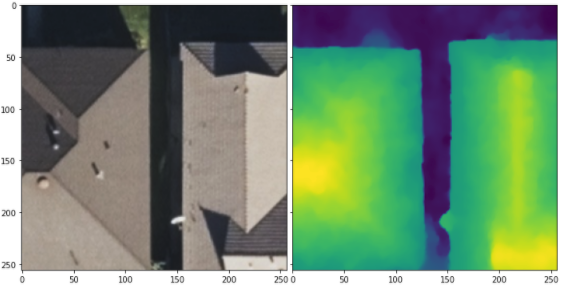

All of the imagery captured and served from the Vexcel Data Program API has elevation data associated with it. For every pixel that has a color, there is also a pixel that represents the elevation.

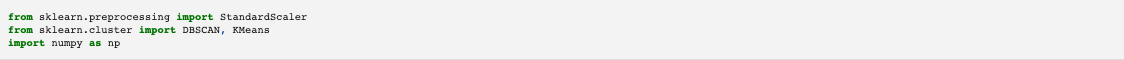

Below, we import two algorithms:

- StandardScaler: this normalizes the data. It means that the ML algorithm we use should perform similarly on tiles at any elevation

- DBSCAN: a simple clustering algorithm that starts randomly grabbing pixels that are close in value if they’re within a certain range (eps parameter) and if the required number of pixels are all grabbed by the increasing cluster (more than min_sample) then that group of pixels gets assigned a label designating them in a single cluster. See the animation in the GIF below.

Since DBSCAN uses random processes, let’s set the random seed to 0 to ensure that this is all reproducible.

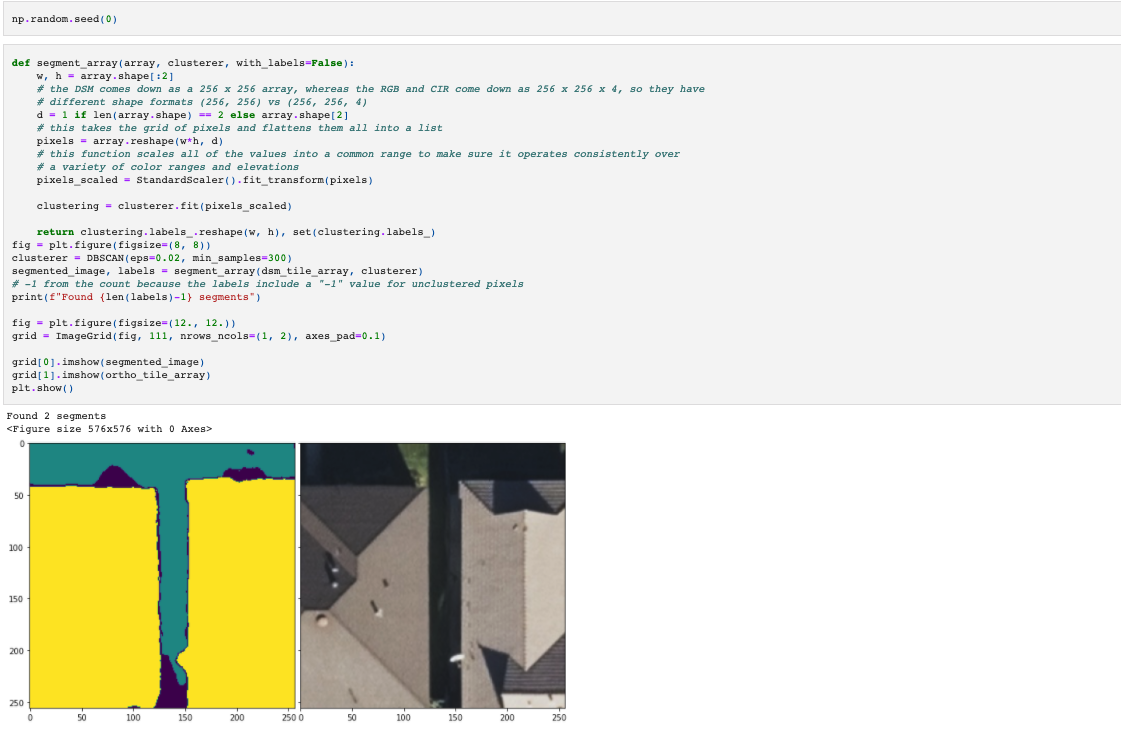

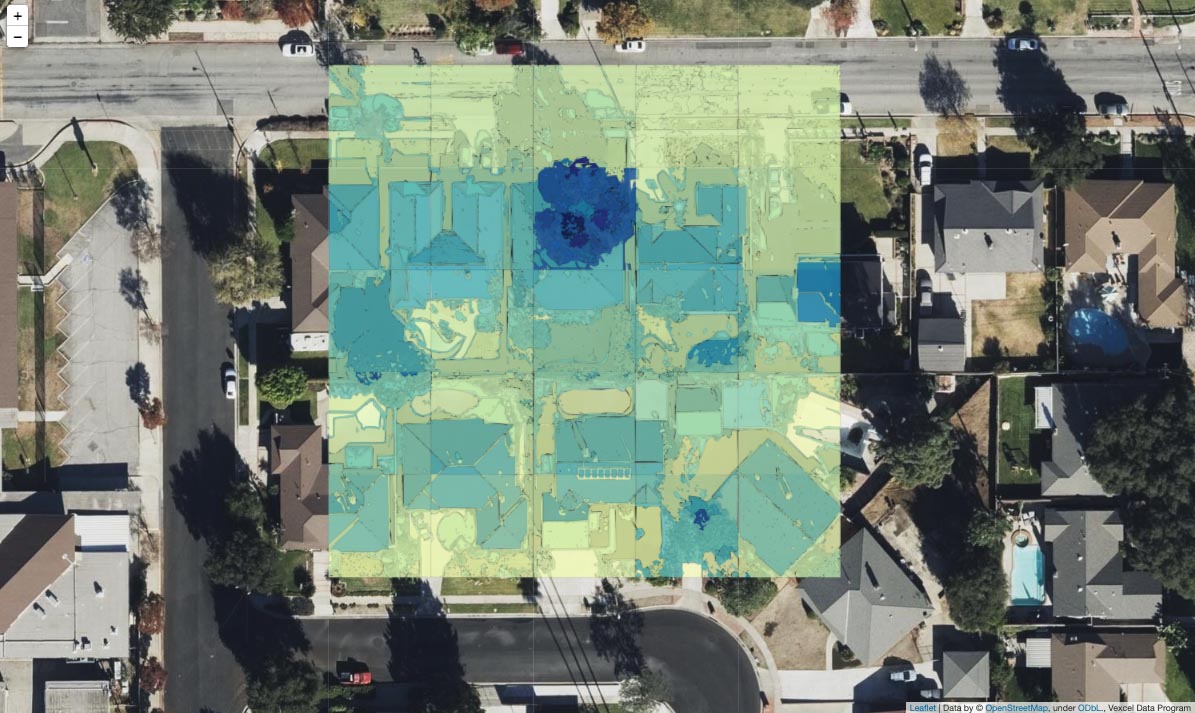

What the image on the left above shows is two clusters of pixels. Green is all the pixels on the ground, yellow covers the pixels that make up the roofs. The dark blue pixels are “unclustered” as DBSCAN couldn’t find a cluster for them based on the parameters set.

To see this more clearly, see the range of plots below.

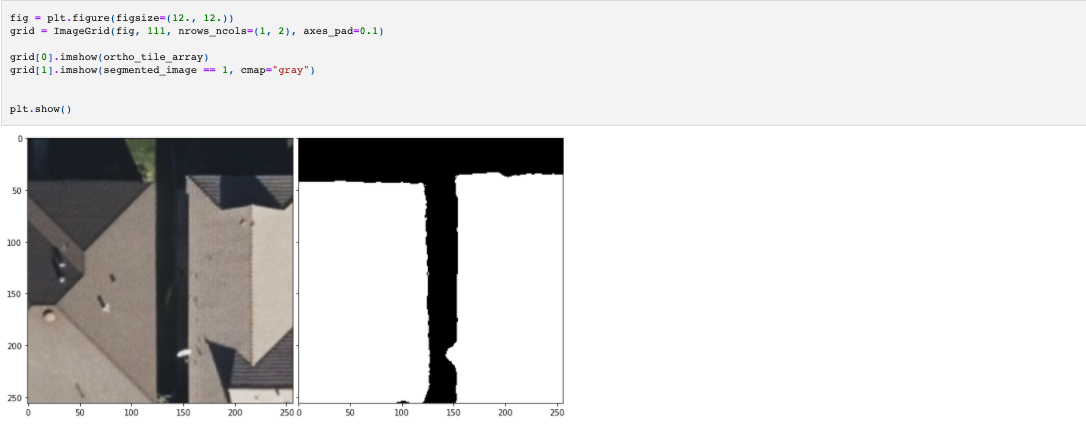

The white sections of each image are members of the same cluster. Notice that in Cluster 1, the two roof sections are regarded as a single cluster. This is because at the moment, DBSCAN is ignorant of anything spatial, we’ve just taken the 2D pixel array and flattened it, removing crucial information.

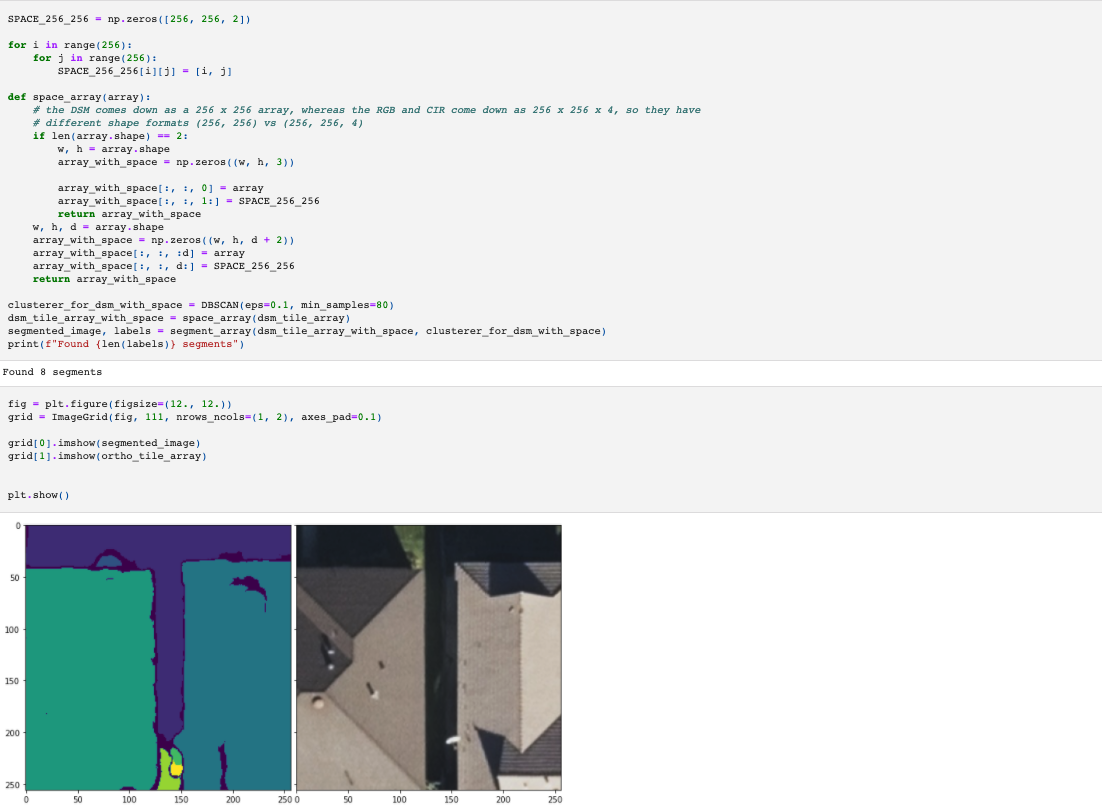

To correct for this, we can just add the spatial values back into the array. Now when we flatten the array, the spatial information will be maintained.

Now you can see that the image above on the left has a different color for each roof. By including the spatial information, DBSCAN distinguished between pixels that were far apart spatially.

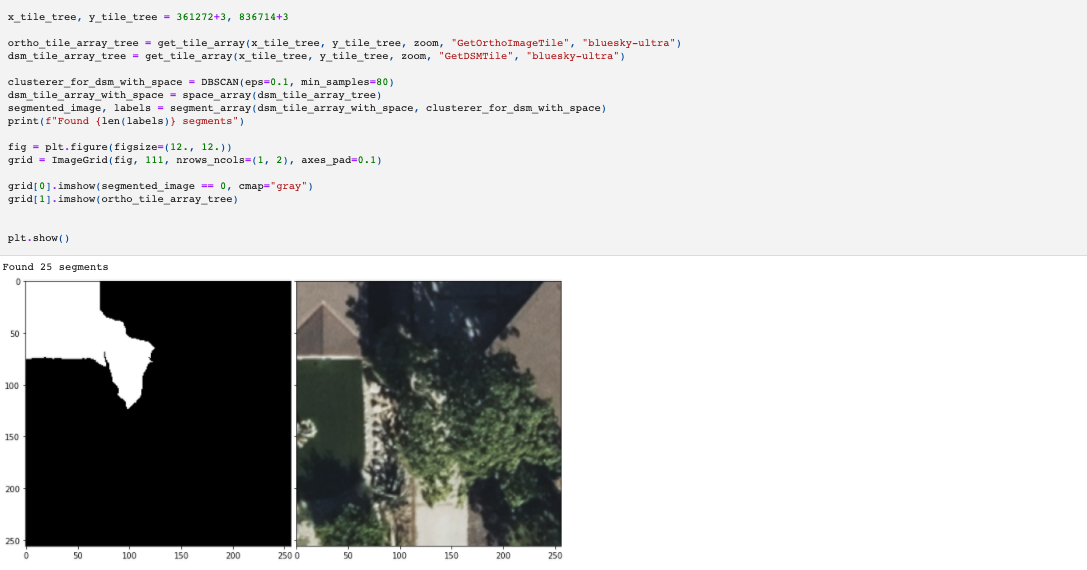

The other challenge of working purely with elevation data is that there are cases where two adjacent objects that are visually distinct have a continuous combined elevation profile, and so appear to be continuous, see the example below where the roof segment continues into the tree.

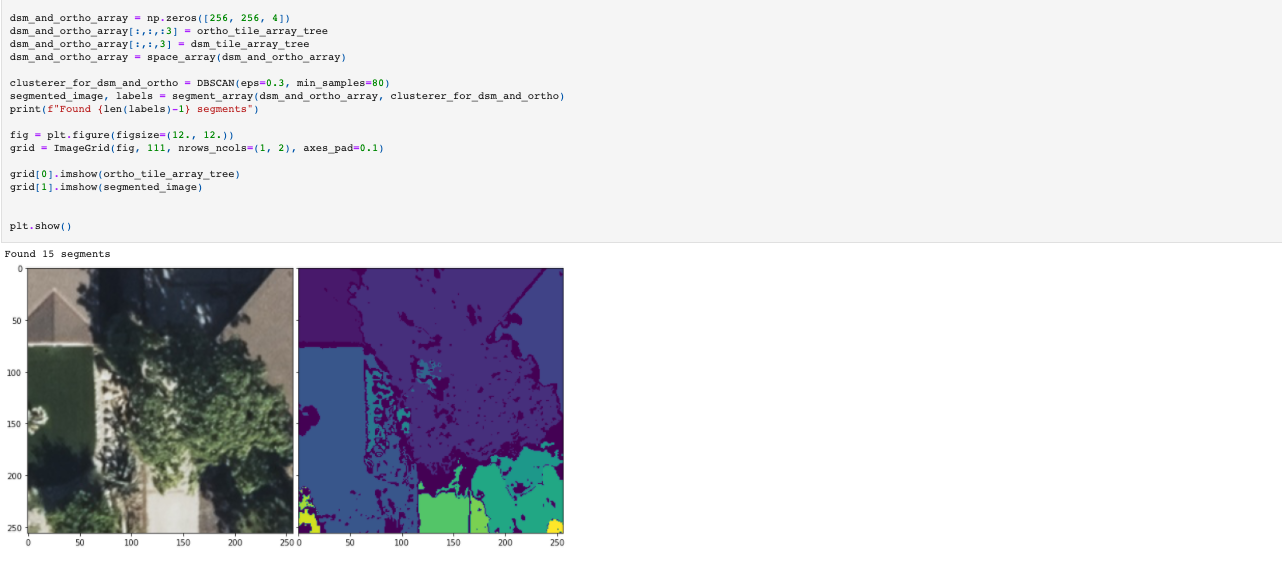

In the image on the top left, we can see the white pixels forming that cluster extending into the tree. To rectify this, we just add the RGB imagery to the array and the clustering algorithm gets better at distinguishing between the tree and the roof.

So now we’re at a point in the analysis where we’ve got a nice method for segmenting images. The final step within the reference frame of the images are to draw lines around each of the segments. To do this, we just use sklearn.measure.find_contours.

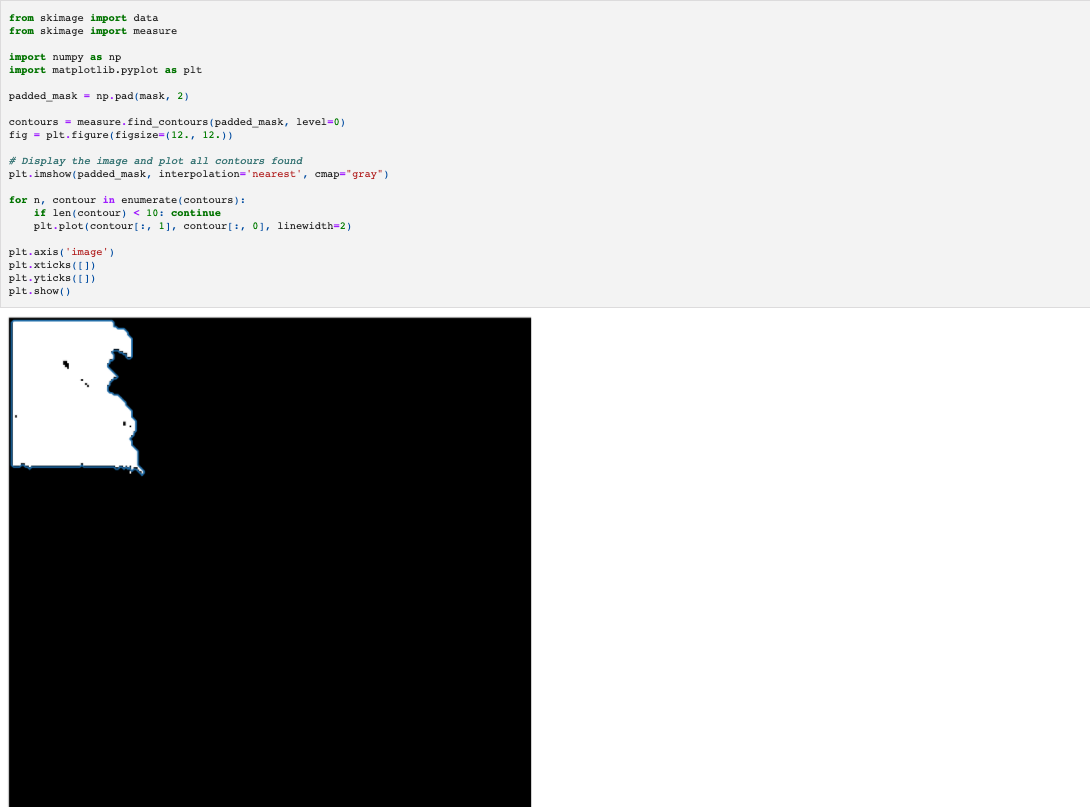

What you can sort of make out in that image is that there are no contours going around the edge of that white feature which is the roof. We want that boundary, so repeat the above but with some padding around the image to give the contour finder a well defined edge.

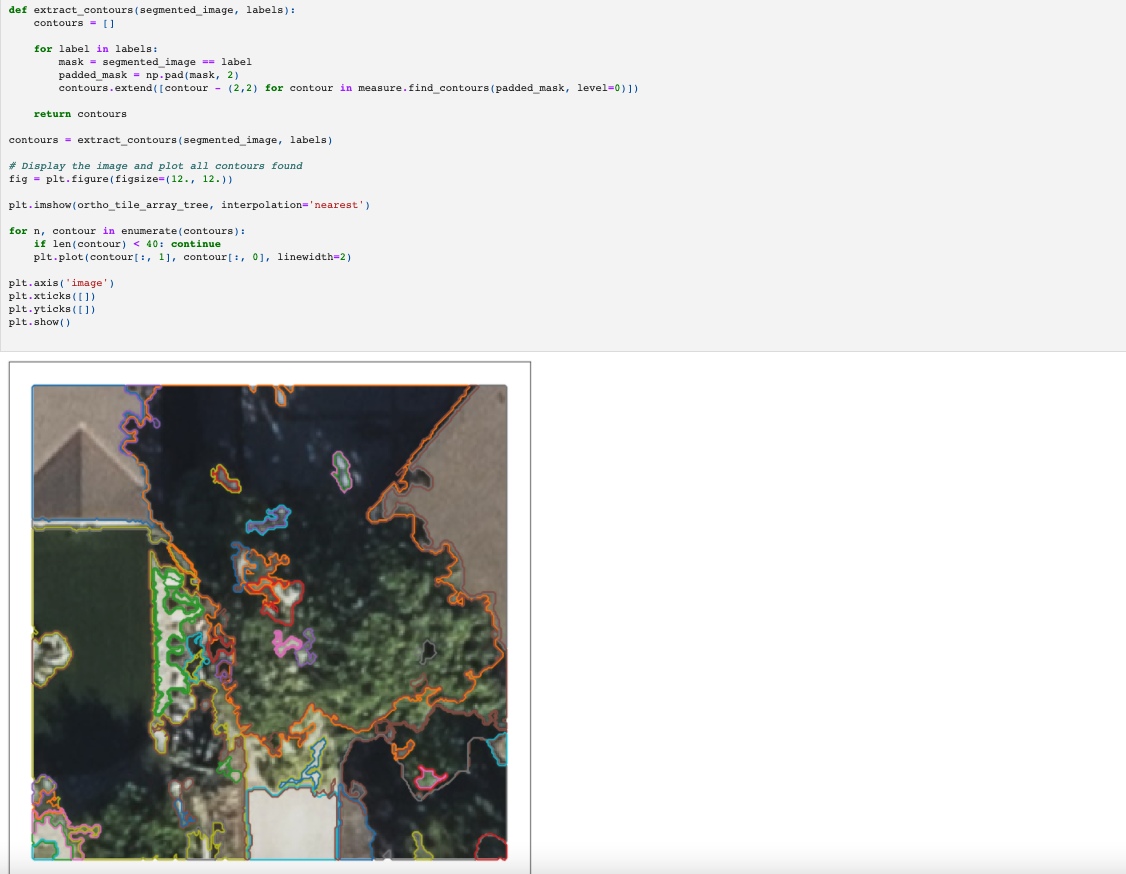

Since we’re going to be using that code to pull out a contour a lot, define a function and run it over all the segments which make up the imagem.

At this point, all of the machine learning work has been done. There are certainly improvements to be made, but the goal with this article is to show how to get from geospatial to pure machine learning, and also back again which is what the next section is about.

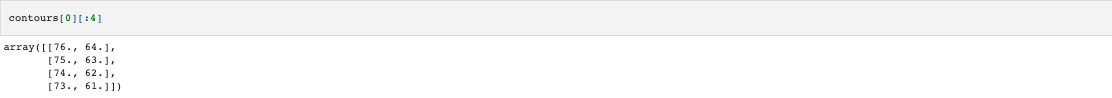

The contours above are just coordinate sequences within the 256 x 256 pixel reference frame of the image. E.g the sequence below encodes a line starting from row 0, column 50 to row 1, column 50, to row 2 etc.

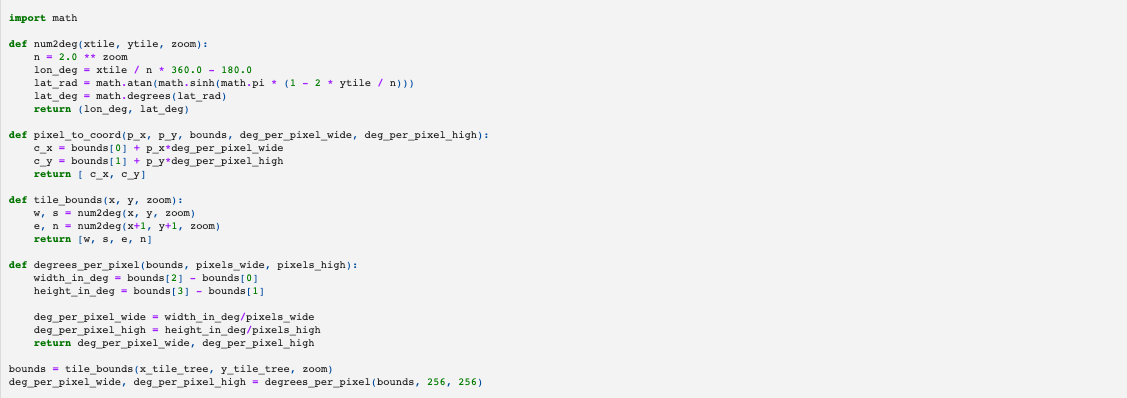

Now what we do is take the coordinates from the pixel reference frame, and combine what we know about where these pixels are located on earth. The functions below take care of all of the mathematics.

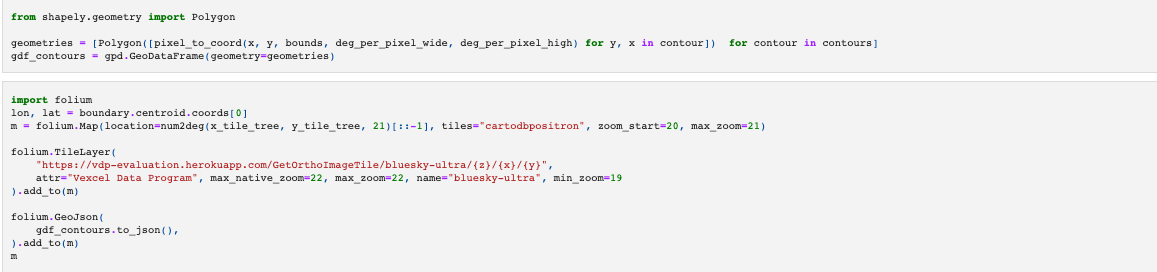

Again, we use Shapely to handle all of the spatial objects. We also use GeoPandas to create a GeoDataFrame containing the geometries. GeoPandas then creates a GeoJSON read by Folium to display a HTML map in the notebook.

Putting it all together

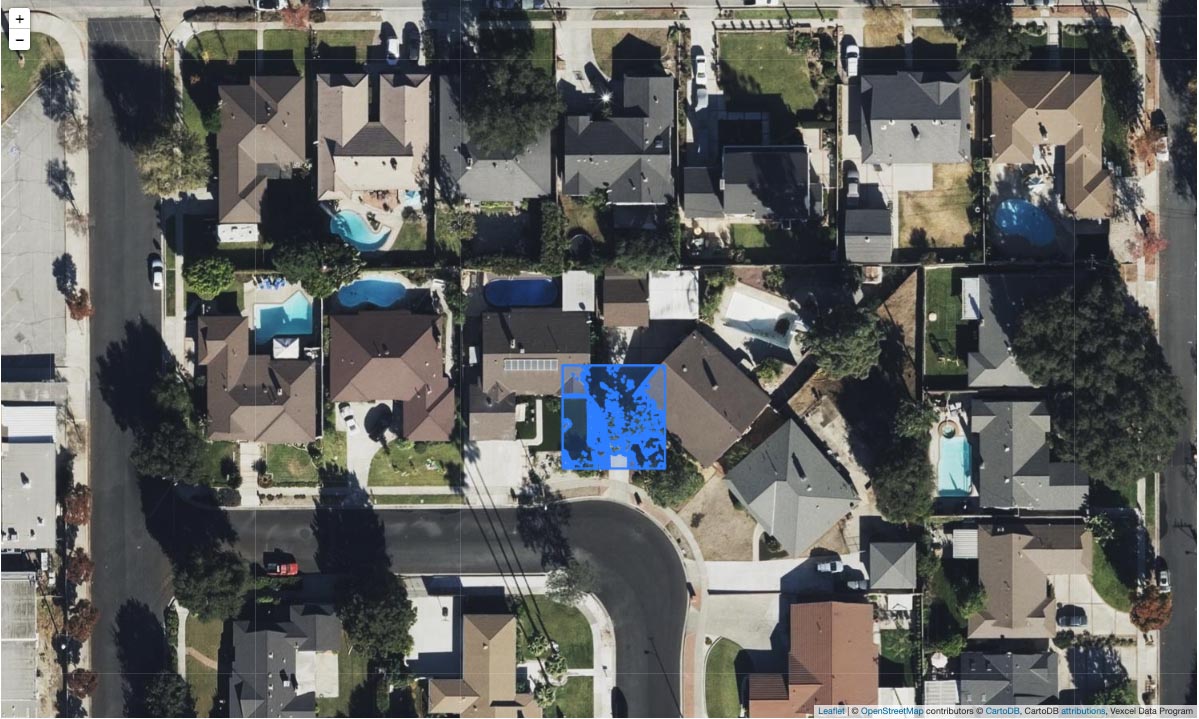

So far we have a set of contours on map that highlight features for a single tile. To do this over large area, we just repeat for multiple tiles. We can also add in some extra steps to ensure that the elevation data within each contour is being captured. The function below does the following, for every map tile in our AOI.

- gets the spatial extent of the map tile and calculates how many degrees wide and high each image is. This information is used to transform the pixel coordinates to geospatial coordinates

- gets the tile arrays for DSM and Ortho imagery for this tile

- preprocesses the data into a single array with spatial information

- instantiates the clustering algorithm

- segments the image with the clustering algorithm

- generates the contours

- transforms the contours from their 256×256 image reference frame to the spherical earth reference frame (yes, the earth isn’t a perfect sphere, but the standard reference frame for map tiles makes that assumption)

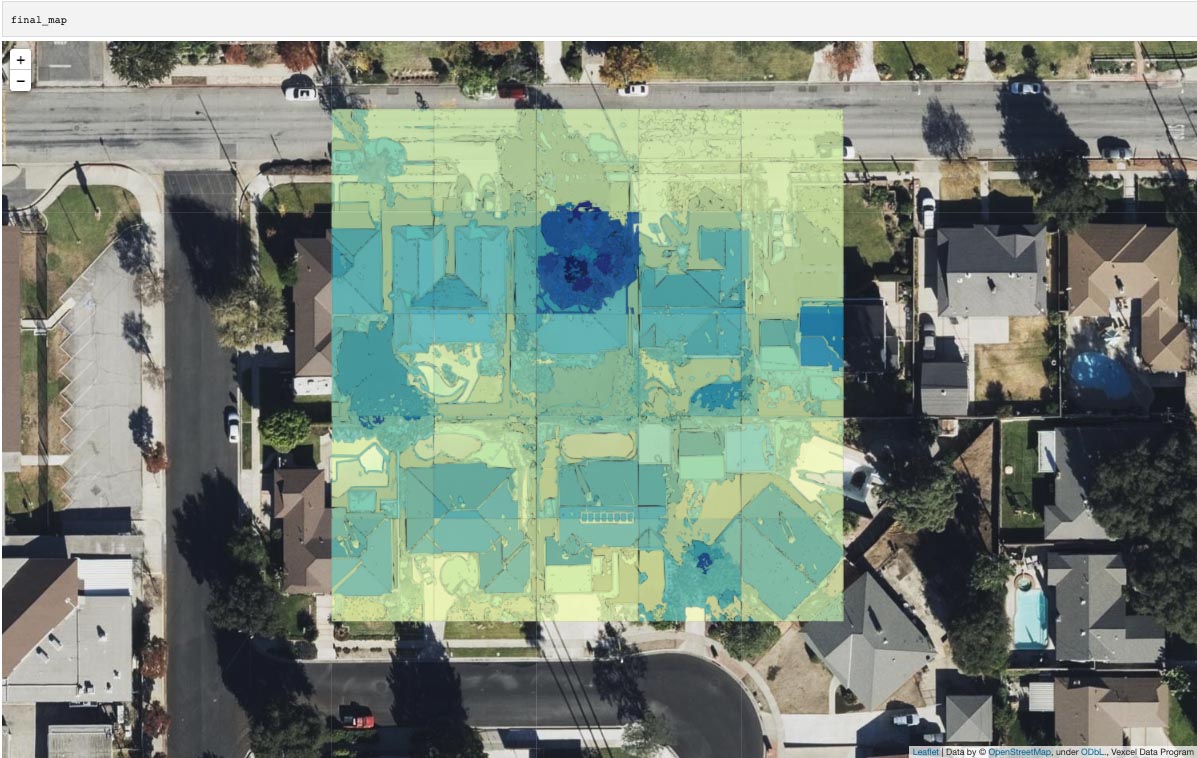

Finally, we run the function above through a for loop, create a GeoDataFrame and pass that to Folium to visualize the features where the color varies from yellow (minimum elevation, ground) to dark blue (highest elevation, trees).

Hopefully after reading through this article, you have a greater understanding of how to work with geospatial data to do machine learning tasks like image classification and feature extraction. Hopefully you can see the advantage of using multiple types of data captured from remote sensing. Using RGB or elevation alone can lead to an incomplete picture of the world. The better the information we can give to algorithms, the better the results.

Some potential next steps if you wanted to continue this analysis:

- investigate alternatives to DBSCAN, including the use of more advanced classifiers including supervised learning

- use PostGIS to merge the geometries that neighbor each other when split by tiles. PostGIS’ support of 3D geometries mean methods like intersect is available in 3D unlike with GeoPandas

- add the elevation value to the contours to create 3D polygons to generate 3D models of buildings and trees